Urban Analytics Lab

About us

We are introducing innovative methods, datasets, and software to derive new insights about cities and advance data-driven urban planning, digital twins, and geospatial technologies for establishing and managing the smart cities of tomorrow. Converging multidisciplinary approaches inspired by recent advancements in computer science, geomatics and urban data science, and influenced by crowdsourcing and open science, we conceive cutting-edge techniques for urban sensing and analytics at the city scale.

Established and directed by Filip Biljecki, we are proudly based at the Department of Architecture at the College of Design and Engineering of the National University of Singapore, a leading global university centered in the heart of Southeast Asia. We are also affiliated with the Department of Real Estate at the NUS Business School.

News

Updates from our group

Recent publications

Full list of publications is here.

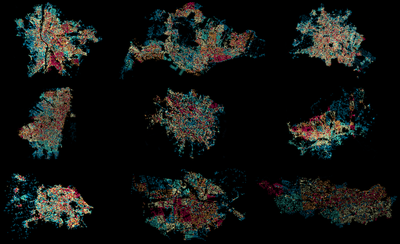

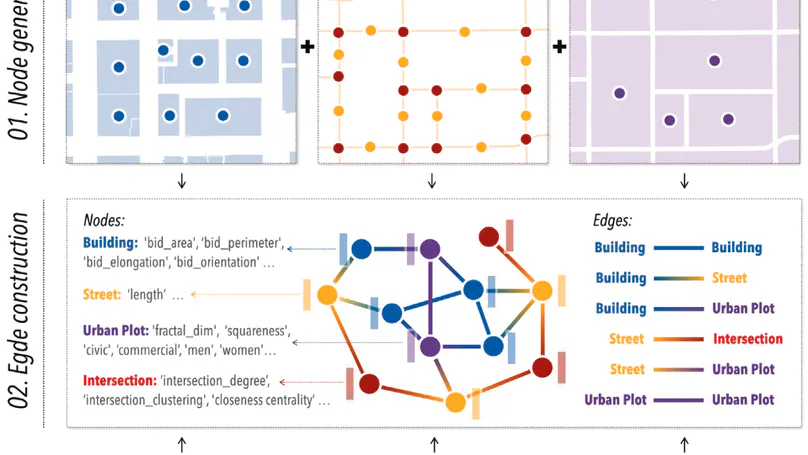

Data on building properties are essential for a variety of urban applications, yet such information remains scarce in many parts of the world. Recent efforts have used machine learning (ML), computer vision (CV), and graph neural networks (GNNs) to assess these properties at scale from urban features or visual information. However, extracting holistic representations to infer building attributes from multi-modal data across multiple spatial scales and vertical building characteristics remains a significant challenge. To bridge this gap, we present an innovative framework that captures both hierarchical urban features and cross-view visual information through a heterogeneous graph. First, we construct a heterogeneous graph that incorporates multi-dimensional urban elements (buildings, streets, intersections, and urban plots) to comprehensively represent multi-scale geospatial features. Second, we automatically crop images of individual buildings from both very high-resolution satellite and street-level imagery, and introduce feature propagation on semantic similarity graphs to supplement missing facade information. Third, we apply feature fusion to integrate morphological and visual features, generating holistic representations for building attribute prediction. Systematic experiments across three global cities demonstrate that our method outperforms existing CV, ML, and homogeneous GNN-based models, achieving classification accuracies of 86% to 96% across 10 to 12 distinct building types, with mean F1 scores ranging from 0.70 to 0.73. The framework demonstrates robustness to class imbalance and produces more distinctive embeddings for ambiguous categories. In the additional task of inferring building age, the method delivers similarly strong performance. This framework advances scalable approaches for filling gaps in building attribute data and offers new insights into modeling holistic urban environments.
Our dataset and code are available openly at: https://github.com/seshing/HeteroGNN-building-attribute-prediction.
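As a rough illustration of what a heterogeneous graph over urban elements looks like, the sketch below stores typed nodes and typed edges and averages neighbour features separately per relation. It is not the authors' implementation (the linked repository is the authoritative source); the node ids, feature values, relation names (`fronts`, `contains`), and the function `aggregate_by_relation` are all hypothetical.

```python
from collections import defaultdict

# Hypothetical toy data: node ids and feature values are made up for illustration.
node_features = {
    ("building", "b1"): [12.0, 3.0],
    ("building", "b2"): [20.0, 10.0],
    ("street", "s1"): [150.0, 2.0],
    ("plot", "p1"): [900.0, 0.4],
}

# Typed edges: (source node, relation name, target node). Relation names are assumptions.
edges = [
    (("street", "s1"), "fronts", ("building", "b1")),
    (("street", "s1"), "fronts", ("building", "b2")),
    (("plot", "p1"), "contains", ("building", "b1")),
]


def aggregate_by_relation(node_features, edges):
    """For each target node, average incoming neighbour features per relation type."""
    grouped = defaultdict(list)
    for src, relation, dst in edges:
        grouped[(dst, relation)].append(node_features[src])
    messages = defaultdict(dict)
    for (dst, relation), feats in grouped.items():
        # Column-wise mean over all neighbours reached through this relation.
        messages[dst][relation] = [sum(col) / len(feats) for col in zip(*feats)]
    return messages


messages = aggregate_by_relation(node_features, edges)
```

Keeping one aggregated message per relation, rather than pooling all neighbours together, is what distinguishes this from a homogeneous graph: a building can treat information from its street differently from information from its plot.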

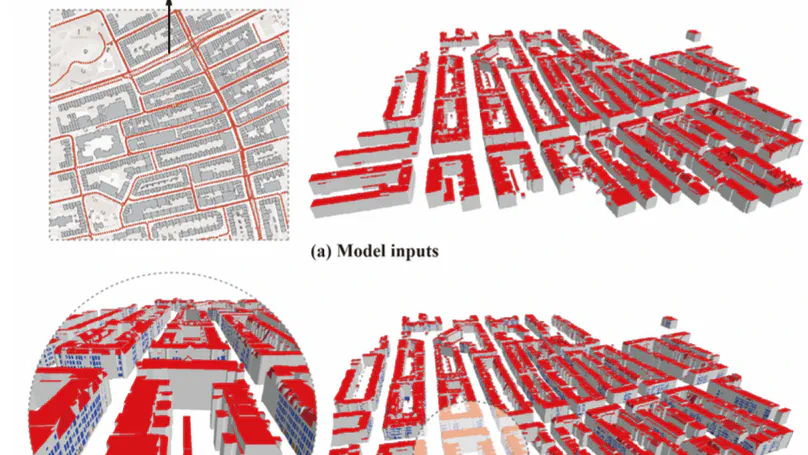

The availability of 3D building models has been increasing, but they often lack detail at the architectural scale. This paper presents a method for reconstructing façade openings in 3D building models by integrating Street View imagery (SVI). Methodologically, the paper advances opening reconstruction in two key ways. First, it introduces a mathematically derived method for estimating unknown intrinsic camera parameters, enabling metric 2D-to-3D projection without relying on multi-view imagery or pre-existing depth information. Second, it extends single-image photogrammetry to accurately measure detailed façade openings, converting pixel coordinates into spatial coordinates. The proposed method is validated through case studies in Amsterdam. Quantitative evaluation using the Façade Re-projection Dice Score (FRDS) shows high spatial consistency between reconstructed openings and reference opening geometries, with most scores ranging from 0.84 to 0.98. Given the broad coverage of SVI, there is significant potential for enhancing 3D city models in diverse urban contexts where current representations remain geometrically basic.
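The exact definition of FRDS is not given on this page. As a hedged illustration only, the standard Dice coefficient between two binary masks, on which a score of this kind is presumably based, can be sketched as follows; the function name `dice_score` and the toy masks are hypothetical.

```python
def dice_score(pred, ref):
    """Generic Dice coefficient 2*|A and B| / (|A| + |B|) for two binary masks.

    Masks are equally sized lists of rows holding 0/1 values.
    This is the textbook formula, not necessarily the paper's exact FRDS.
    """
    if len(pred) != len(ref) or any(len(p) != len(r) for p, r in zip(pred, ref)):
        raise ValueError("masks must have the same shape")
    intersection = sum(
        p & r for prow, rrow in zip(pred, ref) for p, r in zip(prow, rrow)
    )
    total = sum(map(sum, pred)) + sum(map(sum, ref))
    # Two empty masks are treated as a perfect match by convention.
    return 1.0 if total == 0 else 2.0 * intersection / total


# Toy example: a reconstructed opening shifted one column from the reference.
reference = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]
predicted = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
score = dice_score(predicted, reference)  # half of each opening overlaps
```

A score of 1.0 means the reconstructed and reference openings coincide exactly, while values near 0 indicate little overlap.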

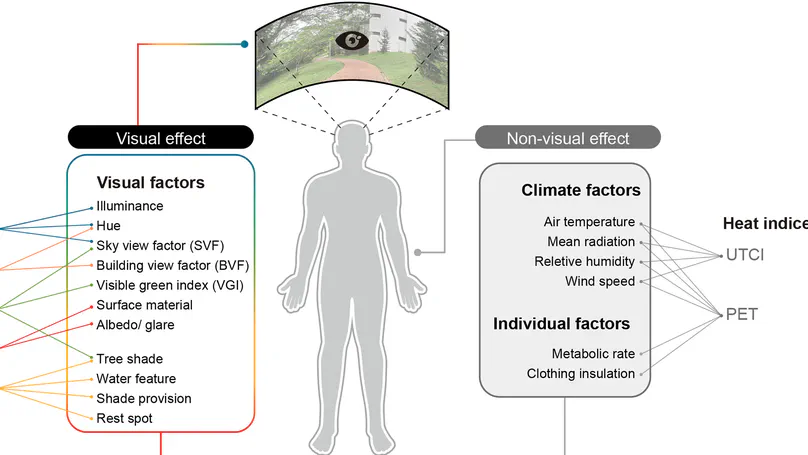

Outdoor thermal comfort is a crucial determinant of urban space quality. While research has developed various heat indices, such as the Universal Thermal Climate Index (UTCI) and the Physiological Equivalent Temperature (PET), these metrics fail to fully capture perceived thermal comfort. Beyond environmental and physiological factors, recent research suggests that visual elements significantly drive outdoor thermal perception. This study integrates computer vision, explainable machine learning, and perceptual assessments to investigate how visual elements in streetscapes affect thermal perception. To provide a comprehensive representation of diverse visual elements, we employed multiple computer vision models (viz. Segment Anything Model, ResNet-50, and Vision Transformer) and applied the Maximum Clique method to systematically select 50 representative ground-level images, each paired with a corresponding thermal image captured simultaneously. An outdoor, web-based survey among 317 students collected thermal sensation votes (TSV), thermal comfort votes (TCV), and element preference data, yielding 2,854 valid responses. The same survey was replicated in an indoor exhibition setting to provide a comparative reference against the outdoor experiment. A Random Forest classifier achieved 70% and 68% accuracy in predicting thermal sensation and comfort, respectively. Using Shapley Additive Explanations to interpret model outcomes, we found that the colour magenta was the most influential visual factor for thermal perception, while greenery, despite being participants' most preferred element for cooling, showed a weaker correlation with actual thermal perception. These findings challenge conventional assumptions about visual thermal comfort and offer a novel framework for image-based thermal perception research, with important implications for climate-responsive urban design.

Urban areas stand at the forefront of the climate crisis, facing escalating environmental pressures, growing social inequalities, and heightened risks to human health and well-being. These challenges are especially pronounced in rapidly expanding cities across the Global South, where informal settlements, resource constraints, and inadequate infrastructure amplify vulnerabilities. Conventional urban planning and management approaches, developed prior to recent advances in data-intensive urban analysis, are increasingly unable to address the complexity, scale, and dynamism of these issues.

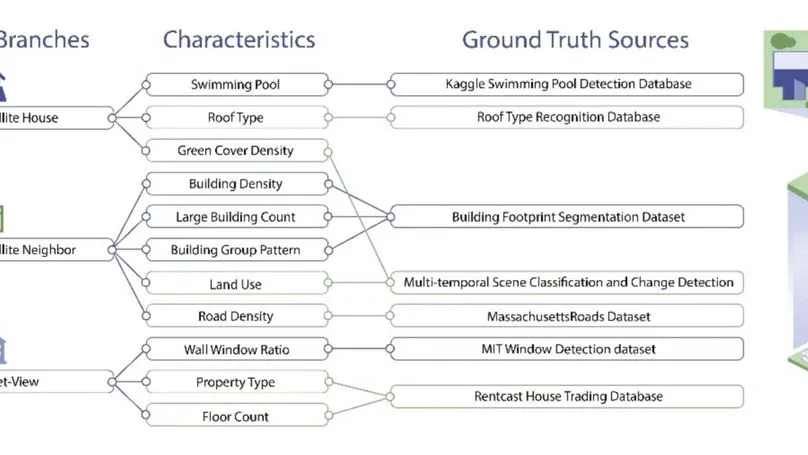

Buildings play a crucial role in shaping urban environments, influencing their physical, functional, and aesthetic characteristics. However, urban analytics is frequently limited by datasets that lack essential semantic details and by fragmentation across diverse and incompatible data sources. To address these challenges, we conducted a comprehensive meta-analysis of 6,285 publications (2019–2024). From this review, we identified 11 key visually discernible building characteristics grouped into three branches: satellite house, satellite neighborhood, and street-view. Based on this structured characteristic system, we introduce BuildingMultiView, an innovative framework leveraging fine-tuned Large Language Models (LLMs) to systematically extract semantically detailed building characteristics from integrated satellite and street-view imagery. Using structured image–prompt–label triplets, the model efficiently annotates characteristics at multiple spatial scales. These characteristics include swimming pools, roof types, building density, wall–window ratio, and property types. Together, they provide a comprehensive and multi-perspective building database. Experiments conducted across five cities in the USA with diverse architecture and urban form (San Francisco, San Diego, Salt Lake City, Austin, and New York City) demonstrate significant performance improvements, with an F1 score of 79.77% compared with 45.66% for the untuned base version of ChatGPT. These results reveal diverse urban building patterns and correlations between architectural and environmental characteristics, showcasing the framework’s capability to analyze both macro-scale and micro-scale urban building data. By integrating multi-perspective data sources with cutting-edge LLMs, BuildingMultiView enhances building data extraction, offering a scalable tool for urban planners to address sustainability, infrastructure, and human-centered design, enabling smarter, resilient cities.

Contact

- [email protected]

- SDE4, NUS College of Design and Engineering, 8 Architecture Dr, Singapore, Singapore 117564

- X