Abstract

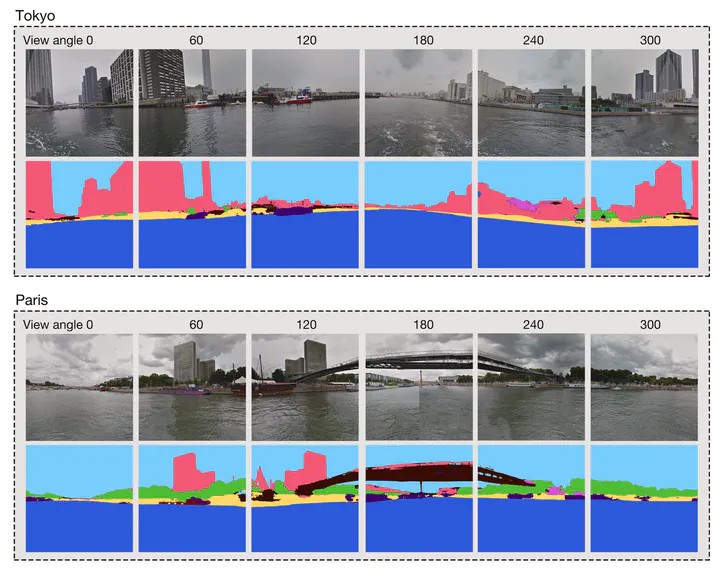

Knowledge about the physical settings and visual characteristics of places has long interested a wide range of fields, because these factors shape observers' experience. Previous studies have relied on on-site surveys, low-throughput methods, and limited data sources, which particularly hinder the analysis of waterscape features. Consequently, relating human perceptions of large-scale urban water areas to waterfront features at high spatial resolution remains challenging, and no worldwide study has yet been conducted. We investigate an alternative: a data-driven waterscape evaluation approach that uses computer vision (CV) to analyze water view imagery (WVI) in 16 cities around the world and virtual reality (VR) to measure how people perceive these scenes. We draw attention to WVI, the counterpart of street view imagery (SVI) on water bodies. WVI is readily available for many cities through common SVI services but has so far been entirely overlooked in research. Specifically, we developed a deep learning model, trained on 500 segmented water-level photos, to analyze these images; it achieves a mean pixel accuracy (MPA) of 94%, advancing the state of the art. The panoramic images were then assessed through a virtual experience survey in which 60 participants indicated their perceptions across multiple dimensions. Finally, a series of statistical analyses identified the visual indicators that drive perceptions and established the relationship between people's subjective visual perceptions and the objective waterscape environment as seen by machines. The results bring researchers and watercourse planners one step closer to understanding how the perceptions and semantics of water areas interact globally.
The large-scale dataset produced in this research has been openly released as the first open dataset of segmented water view imagery and is intended to support future studies.
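The abstract reports segmentation quality as mean pixel accuracy (MPA) without defining it. Under the common semantic-segmentation definition, MPA is the fraction of each class's ground-truth pixels that are predicted correctly, averaged over classes. A minimal sketch of this computation follows. The function names, the flattened label lists, and the two-class example (0 = water, 1 = non-water) are hypothetical, and this is not the authors' evaluation code.

```python
from typing import List, Sequence


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int], num_classes: int) -> List[List[int]]:
    """Count pixels per (ground-truth class, predicted class) pair; rows are ground truth."""
    if len(y_true) != len(y_pred):
        raise ValueError("label sequences must have the same length")
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def mean_pixel_accuracy(cm: List[List[int]]) -> float:
    """Average over classes of p_ii / sum_j p_ij (per-class pixel accuracy).

    Classes with no ground-truth pixels are skipped to avoid division by zero.
    """
    per_class = [row[i] / sum(row) for i, row in enumerate(cm) if sum(row) > 0]
    if not per_class:
        raise ValueError("confusion matrix contains no ground-truth pixels")
    return sum(per_class) / len(per_class)


def pixel_accuracy(cm: List[List[int]]) -> float:
    """Overall fraction of correctly labelled pixels, for comparison with MPA."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


# Hypothetical flattened masks: six water pixels and two non-water pixels.
cm = confusion_matrix([0, 0, 0, 0, 0, 0, 1, 1], [0, 0, 0, 0, 0, 0, 1, 0], num_classes=2)
print(cm)                       # [[6, 0], [1, 1]]
print(mean_pixel_accuracy(cm))  # 0.75  (mean of 6/6 and 1/2)
print(pixel_accuracy(cm))       # 0.875 (7 of 8 pixels)
```

Because MPA weights every class equally, a rare class that is segmented poorly lowers it more than it lowers overall pixel accuracy. In the example, the overall pixel accuracy is 0.875, but MPA is only 0.75.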